Introduction

The rapid advancement of artificial intelligence (AI) has changed how many industries operate, creating new opportunities for progress and innovation. With that capability comes the responsibility to ensure that AI is used ethically, responsibly, and in a manner that aligns with human values. An AI acceptable use policy (AUP) guides the development and deployment of AI systems so that misuse is prevented and potential risks are mitigated.

Purpose of an AI AUP

An AUP for AI establishes clear guidelines and expectations for the use of AI technologies within an organization. It defines acceptable and unacceptable practices, outlines potential risks and consequences, and provides a framework for addressing ethical concerns. By implementing an AI AUP, organizations can:

- Promote ethical use: Ensure that AI is developed and deployed in accordance with ethical principles, such as fairness, transparency, and accountability.

- Protect sensitive data: Mitigate risks associated with the collection, storage, and processing of sensitive data by AI systems.

- Address potential bias: Identify and mitigate potential biases that may arise in AI systems, ensuring fair and impartial outcomes.

- Enhance transparency and trust: Foster transparency in the development and deployment of AI by providing clear information to stakeholders and the public.

Key Components of an AI AUP

An effective AI AUP should include the following key components:

- Scope: Clearly define the types of AI technologies covered by the policy, including machine learning algorithms, natural language processing, and computer vision.

- Acceptable uses: Outline specific use cases where AI is permitted, such as improving customer service, automating data analysis, and optimizing business processes.

- Unacceptable uses: Prohibit the use of AI for malicious or unethical purposes, such as unauthorized surveillance, discrimination, or privacy violations.

- Responsibilities: Assign clear responsibilities for the development, deployment, and monitoring of AI systems to the people involved, including researchers, engineers, and business leaders.

- Monitoring and enforcement: Establish mechanisms for monitoring compliance with the AUP and enforcing consequences for violations.

- Updates and revisions: Regularly review and update the AUP to reflect evolving technologies and ethical considerations.
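The components above can also be captured in a machine-readable form so that proposed uses are checked consistently. The following is a minimal sketch, not a standard schema: the class name `AcceptableUsePolicy`, its field names, the role names, and the four review outcomes are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class AcceptableUsePolicy:
    """Hypothetical machine-readable summary of an AI AUP."""
    scope: frozenset            # AI technologies the policy covers
    acceptable_uses: frozenset  # use cases that are explicitly permitted
    prohibited_uses: frozenset  # use cases that are explicitly forbidden
    owners: dict = field(default_factory=dict)  # activity -> responsible role

    def review(self, technology: str, use_case: str) -> str:
        """Classify a proposed use as out_of_scope, deny, allow, or needs_review."""
        if technology not in self.scope:
            return "out_of_scope"
        if use_case in self.prohibited_uses:
            return "deny"
        if use_case in self.acceptable_uses:
            return "allow"
        # Anything the policy does not name is escalated rather than guessed at.
        return "needs_review"


# Example values are assumptions loosely based on the components listed above.
policy = AcceptableUsePolicy(
    scope=frozenset({"machine_learning", "nlp", "computer_vision"}),
    acceptable_uses=frozenset({"customer_service", "data_analysis"}),
    prohibited_uses=frozenset({"discrimination", "privacy_violation"}),
    owners={"development": "engineering", "monitoring": "ethics_committee"},
)

print(policy.review("nlp", "customer_service"))            # allow
print(policy.review("computer_vision", "discrimination"))  # deny
print(policy.review("nlp", "marketing_copy"))              # needs_review
print(policy.review("robotics", "data_analysis"))          # out_of_scope
```

Routing unlisted use cases to `needs_review` rather than allowing them by default is one possible design choice; it keeps a human in the loop for cases the policy authors did not anticipate.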

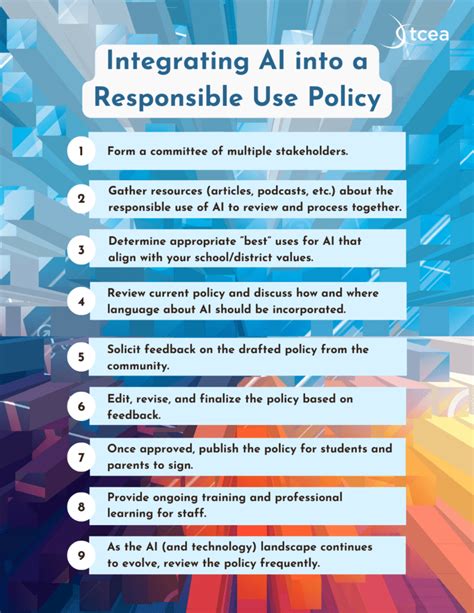

Developing an AI AUP

The development of an AI AUP should be a collaborative process that involves input from various stakeholders, including:

- Technical experts: Engineers, data scientists, and researchers who have expertise in developing and deploying AI systems.

- Legal counsel: Attorneys who can provide guidance on compliance with relevant laws and regulations.

- Business leaders: Executives responsible for making decisions about the use of AI within the organization.

- Ethics committees: Groups of experts who can provide guidance on ethical considerations and potential risks associated with AI.

- Customers and other affected parties: Individuals or organizations who may be affected by the use of AI systems.

Best Practices for AI Use

In addition to an AUP, organizations should adopt the following best practices to ensure the ethical and responsible use of AI:

- Transparency: Provide clear and accessible information about the development, deployment, and use of AI systems.

- Accountability: Hold individuals and organizations accountable for the outcomes and impacts of AI systems.

- Fairness: Ensure that AI systems treat all individuals fairly and impartially, regardless of their race, gender, religion, or other protected characteristics.

- Safety and security: Implement measures to protect AI systems from unauthorized access, manipulation, or misuse.

- Continuous improvement: Regularly review and update AI systems to mitigate risks and address ethical concerns.
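The fairness practice above can be made measurable. The sketch below computes the approval rate per group and the largest gap between groups, a quantity often called the demographic parity difference. It is only one of several possible fairness metrics, and the function names, group labels, and sample data are assumptions made for illustration.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Map each group to its approval rate, given (group, approved) pairs."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(bool(ok))
    return {group: approved[group] / totals[group] for group in totals}


def parity_gap(decisions):
    """Largest difference in approval rate between any two groups."""
    rates = selection_rates(decisions)
    if not rates:
        return 0.0  # no decisions, so no measurable gap
    return max(rates.values()) - min(rates.values())


# Made-up sample: group "a" is approved 3 times out of 4, group "b" once out of 4.
sample = [
    ("a", True), ("a", True), ("a", False), ("a", True),
    ("b", True), ("b", False), ("b", False), ("b", False),
]

rates = selection_rates(sample)
gap = parity_gap(sample)
print(rates)  # {'a': 0.75, 'b': 0.25}
print(gap)    # 0.5
```

What size of gap should trigger a review is a policy decision that the AUP itself would need to state; the code only reports the number.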

Case Study: Real-World Examples of AI Misuse

Numerous real-world examples highlight the potential risks associated with the misuse of AI:

- Facial recognition technology: Facial recognition has been used for mass surveillance without adequate consent or transparency, resulting in privacy violations.

- Algorithmic bias: AI systems trained on biased data have led to discriminatory outcomes in hiring, loan approvals, and other decision-making processes.

- Deepfakes: AI-generated videos and audio recordings have been used to spread misinformation and manipulate public opinion.

- Weaponized AI: AI-powered autonomous weapons have raised ethical concerns about their potential to cause harm or death.

Future Trends and Innovations

As AI continues to evolve, new applications and challenges will emerge. The following coined terms, which are not established terminology, are offered as prompts for thinking about future AI applications:

- Synthetigation: The automated generation of synthetic data to enhance training and mitigate bias in AI models.

- Proximalization: The use of AI to intelligently connect people and resources based on their proximity and shared interests.

- Contingency optimization: The development of AI systems that can adapt and optimize plans based on unforeseen circumstances.

Conclusion

An AI AUP is an essential tool for organizations to ensure the ethical and responsible use of AI technologies. By establishing clear guidelines, assigning responsibilities, and promoting best practices, organizations can mitigate risks, protect sensitive data, and foster trust in the deployment of AI. As AI continues to transform various industries, it is crucial to remain vigilant and proactive in addressing ethical concerns and ensuring that AI is used for the betterment of humanity.