This course provides a comprehensive introduction to probability models. Topics include:

- The basics of probability theory

- Discrete and continuous random variables

- Joint distributions and conditional probability

- Bayesian inference

- Applications of probability models

Prerequisites:

- STAT 20 or STAT 21 or STAT 24 or STAT 25A or equivalent

- Math 1A, Math 1B, and Math 54 or equivalent

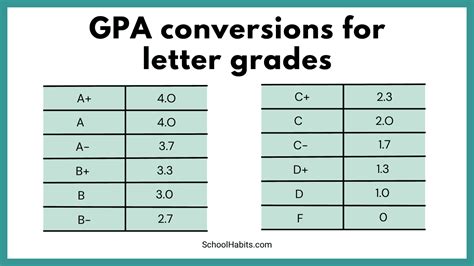

Grading:

- Homework assignments (20%)

- Midterm exam (30%)

- Final exam (50%)

Textbook:

- Introduction to Probability Models by Sheldon M. Ross, 10th Edition

Course Outline:

Week 1: Introduction to Probability

- What is probability?

- The axioms of probability

- Conditional probability and independence
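The Week 1 definitions can be sketched in a few lines of Python. The sketch assumes a finite sample space of equally likely outcomes (two fair dice, chosen only for illustration). Events are predicates, P(A | B) = P(A and B) / P(B), and A and B are independent exactly when P(A and B) = P(A)P(B). The helper names `prob`, `cond_prob`, and `independent` are hypothetical.

```python
from fractions import Fraction
from itertools import product

# Sample space: all 36 equally likely outcomes of rolling two fair dice.
OMEGA = list(product(range(1, 7), repeat=2))

def prob(event):
    """P(event) under equal likelihood; an event is a predicate on outcomes."""
    hits = sum(1 for w in OMEGA if event(w))
    return Fraction(hits, len(OMEGA))

def cond_prob(a, b):
    """P(A | B) = P(A and B) / P(B); requires P(B) > 0."""
    return prob(lambda w: a(w) and b(w)) / prob(b)

def independent(a, b):
    """A and B are independent iff P(A and B) == P(A) * P(B)."""
    return prob(lambda w: a(w) and b(w)) == prob(a) * prob(b)

def first_is_six(w):
    return w[0] == 6

def sum_is_seven(w):
    return w[0] + w[1] == 7

def sum_is_eight(w):
    return w[0] + w[1] == 8

print(independent(first_is_six, sum_is_seven))  # True
print(independent(first_is_six, sum_is_eight))  # False
print(cond_prob(sum_is_eight, first_is_six))    # 1/6
```

Exact `Fraction` arithmetic avoids floating-point noise in the equality test. Note that "the first die shows 6" is independent of "the sum is 7" but not of "the sum is 8".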

Week 2: Discrete Random Variables

- Bernoulli and binomial distributions

- Poisson distribution

- Hypergeometric distribution
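The hypergeometric distribution counts successes when sampling without replacement. Its PMF is P(X = k) = C(K, k) C(N - K, n - k) / C(N, n), and its mean is nK/N. Below is a minimal Python sketch. The card example and the function name `hypergeom_pmf` are illustrative choices, not taken from the course materials.

```python
from math import comb, isclose

def hypergeom_pmf(k, N, K, n):
    """P(X = k) when drawing n items without replacement from a population
    of N items, K of which are "successes"."""
    # Outside this range the probability is zero (and comb would fail on negatives).
    if not max(0, n - (N - K)) <= k <= min(n, K):
        return 0.0
    return comb(K, k) * comb(N - K, n - k) / comb(N, n)

# Illustrative example: number of aces in a 5-card hand from a standard 52-card deck.
N, K, n = 52, 4, 5
pmf = [hypergeom_pmf(k, N, K, n) for k in range(n + 1)]
mean = sum(k * p for k, p in enumerate(pmf))

print(isclose(sum(pmf), 1.0))  # True: the PMF sums to 1 up to rounding
print(mean, n * K / N)         # both about 0.385
```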

Week 3: Continuous Random Variables

- Uniform distribution

- Exponential distribution

- Normal distribution
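The CDFs of the three Week 3 distributions have simple closed forms (the normal via the error function). This Python sketch also checks the exponential's memoryless property, P(X > s + t | X > s) = P(X > t). The parameter values are arbitrary and chosen only for illustration.

```python
import math

def uniform_cdf(x, a, b):
    """P(X <= x) for X ~ Uniform(a, b)."""
    return min(max((x - a) / (b - a), 0.0), 1.0)

def exponential_cdf(x, rate):
    """P(X <= x) for X ~ Exponential(rate); the mean is 1 / rate."""
    return 1.0 - math.exp(-rate * x) if x > 0 else 0.0

def normal_cdf(x, mu=0.0, sigma=1.0):
    """P(X <= x) for X ~ Normal(mu, sigma^2), via the error function."""
    return 0.5 * (1.0 + math.erf((x - mu) / (sigma * math.sqrt(2.0))))

# Memorylessness of the exponential: P(X > s + t | X > s) == P(X > t).
rate, s, t = 0.5, 2.0, 3.0  # illustrative values
lhs = (1 - exponential_cdf(s + t, rate)) / (1 - exponential_cdf(s, rate))
rhs = 1 - exponential_cdf(t, rate)
print(math.isclose(lhs, rhs))  # True

print(uniform_cdf(0.25, 0.0, 1.0))         # 0.25
print(normal_cdf(1.0) - normal_cdf(-1.0))  # about 0.6827
```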

Week 4: Joint Distributions

- Joint distributions of discrete random variables

- Joint distributions of continuous random variables

- Marginal and conditional distributions
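In the discrete case, marginal and conditional distributions come straight from the joint PMF. A marginal sums out the other variable, and a conditional PMF divides the joint by a marginal. The joint PMF below is made up for illustration, and the helper names are hypothetical.

```python
from collections import defaultdict
from fractions import Fraction

# Hypothetical joint PMF p(x, y) of two binary random variables X and Y.
joint = {
    (0, 0): Fraction(1, 8), (0, 1): Fraction(1, 8),
    (1, 0): Fraction(1, 4), (1, 1): Fraction(1, 2),
}

def marginal(joint, axis):
    """Sum the joint PMF over the other variable: axis=0 gives p_X, axis=1 gives p_Y."""
    out = defaultdict(Fraction)  # Fraction() == 0
    for xy, p in joint.items():
        out[xy[axis]] += p
    return dict(out)

def conditional_y_given_x(joint, x):
    """p_{Y|X}(y | x) = p(x, y) / p_X(x); requires p_X(x) > 0."""
    px = marginal(joint, 0)[x]
    return {y: p / px for (xi, y), p in joint.items() if xi == x}

print(marginal(joint, 0))              # p_X: {0: 1/4, 1: 3/4}
print(marginal(joint, 1))              # p_Y: {0: 3/8, 1: 5/8}
print(conditional_y_given_x(joint, 1)) # p_{Y|X}(. | 1): {0: 1/3, 1: 2/3}
```

Because p_{Y|X}(1 | 1) = 2/3 differs from p_Y(1) = 5/8, X and Y in this example are not independent.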

Week 5: Bayesian Inference

- Bayes’ theorem

- Applications of Bayesian inference
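For a binary hypothesis H and evidence E, Bayes' theorem reads P(H | E) = P(E | H) P(H) / [P(E | H) P(H) + P(E | not H) P(not H)]. The Python sketch below applies it to a hypothetical diagnostic test with 1% prevalence, 95% sensitivity, and a 5% false-positive rate. These numbers are illustrative assumptions, not data.

```python
from fractions import Fraction

def posterior(prior, likelihood, likelihood_if_not):
    """Bayes' theorem for a binary hypothesis H given evidence E.

    prior             = P(H)
    likelihood        = P(E | H)
    likelihood_if_not = P(E | not H)
    """
    evidence = likelihood * prior + likelihood_if_not * (1 - prior)  # P(E), law of total probability
    return likelihood * prior / evidence

p = posterior(
    prior=Fraction(1, 100),              # P(disease), hypothetical
    likelihood=Fraction(95, 100),        # P(positive | disease), hypothetical
    likelihood_if_not=Fraction(5, 100),  # P(positive | no disease), hypothetical
)
print(p, float(p))  # 19/118, about 0.161
```

Even though P(positive | disease) = 0.95, P(disease | positive) is only about 0.16 because the condition is rare. The two conditional probabilities are not interchangeable.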

Week 6: Applications of Probability Models

- Queueing theory

- Reliability theory

- Finance

Common Mistakes to Avoid:

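- Confusing a probability with an observed frequency: a relative frequency from finitely many trials only estimates the underlying probability

- Ignoring the conditioning event, for example confusing P(A | B) with P(B | A)

- Assuming independence without justification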
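The gap between a probability and an observed frequency is easy to see in simulation. In this Python sketch, the probability of heads is fixed at 0.5, but the relative frequency of heads varies with the number of flips and tends toward 0.5 only as the number of trials grows (the law of large numbers). The coin-flip setup and the seed are arbitrary illustrative choices.

```python
import random

def relative_frequency(p, n, rng):
    """Fraction of successes in n independent Bernoulli(p) trials."""
    return sum(rng.random() < p for _ in range(n)) / n

rng = random.Random(0)  # fixed seed so the run is reproducible
for n in (10, 100, 10_000):
    # Small n can stray far from p = 0.5; large n typically lands close to it.
    print(n, relative_frequency(0.5, n, rng))
```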

How to Approach the Course:

- Read the textbook before each lecture

- Attend all lectures and take notes

- Do the homework assignments on time

- Get help from the instructor or a tutor if needed

- Review the material regularly

Summary of Common Distributions

| Distribution | PMF/PDF | Mean | Variance |
|---|---|---|---|
| Bernoulli | P(X = x) = p^x (1-p)^(1-x), x = 0, 1 | p | p(1-p) |
| Binomial | P(X = x) = (n choose x) p^x (1-p)^(n-x), x = 0, 1, ..., n | np | np(1-p) |
| Poisson | P(X = x) = e^(-lambda) lambda^x / x!, x = 0, 1, 2, ... | lambda | lambda |
| Normal | f(x) = (1 / (sigma sqrt(2 pi))) exp(-(x - mu)^2 / (2 sigma^2)) | mu | sigma^2 |
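The mean and variance formulas in the table can be checked numerically. This Python sketch uses arbitrarily chosen parameters. It sums each discrete PMF over its support, truncating the Poisson sum at 100 terms (an assumption under which the tail is negligible for lambda = 4). It also integrates the normal density with a midpoint rule over mu ± 10 sigma.

```python
import math

def discrete_moments(pmf, support):
    """Mean and variance of a discrete distribution by direct summation over its support."""
    mean = sum(x * pmf(x) for x in support)
    var = sum((x - mean) ** 2 * pmf(x) for x in support)
    return mean, var

def normal_moments(mu, sigma, steps=100_000):
    """Mean and variance of Normal(mu, sigma^2) by midpoint-rule integration over mu +/- 10 sigma."""
    def pdf(x):
        return math.exp(-(x - mu) ** 2 / (2 * sigma**2)) / (sigma * math.sqrt(2 * math.pi))
    h = 20 * sigma / steps
    xs = [mu - 10 * sigma + (i + 0.5) * h for i in range(steps)]
    mean = sum(x * pdf(x) * h for x in xs)
    var = sum((x - mean) ** 2 * pdf(x) * h for x in xs)
    return mean, var

p, n, lam = 0.3, 10, 4.0  # illustrative parameters

def bernoulli(x):
    return p**x * (1 - p) ** (1 - x)

def binomial(x):
    return math.comb(n, x) * p**x * (1 - p) ** (n - x)

def poisson(x):
    return math.exp(-lam) * lam**x / math.factorial(x)

print(discrete_moments(bernoulli, range(2)))     # close to (p, p(1-p)) = (0.3, 0.21)
print(discrete_moments(binomial, range(n + 1)))  # close to (np, np(1-p)) = (3.0, 2.1)
print(discrete_moments(poisson, range(100)))     # close to (lambda, lambda) = (4.0, 4.0)
print(normal_moments(mu=1.0, sigma=2.0))         # close to (mu, sigma^2) = (1.0, 4.0)
```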

Creative New Word: Probality

Probality is a portmanteau of the words “probability” and “ability.” It can be used to generate ideas for new applications of probability models. For example, we could consider the probality of success for a new product launch. Or, we could consider the probality of failure for a new engineering design. By thinking in terms of probality, we can open up new possibilities for using probability models to solve real-world problems.